Craft-driven static Astro builds in S3, a baptism by fire

02/04/2024

I am normally very toe-in-the-water on AWS, but a recent project forced me to go all-in on it and it was pretty fun, all things considered. The high-level infrastructure of this is:

- Gitlab for code hosting, container registry, and CI/CD

- S3 to hold builds and uploads

- CloudFront for HTTPS on the build S3 bucket

- Route 53 for the domain (I learned that you can delegate a subdomain to its own nameservers, which is very cool)

- IAM users for Craft and Gitlab to upload to S3. Yes, I created two different users.

- Lightsail for CMS hosting

- Amazon certificates (ACM) for CloudFront

- Let's Encrypt for Lightsail

And that's it. This site has a similar setup, but it uses Cloudflare Workers for Astro so I can have SSR (S3 only supports SSG), Linode for the CMS and Cloudflare R2 for uploads. I have too many posts to rebuild the whole site every time I publish one.

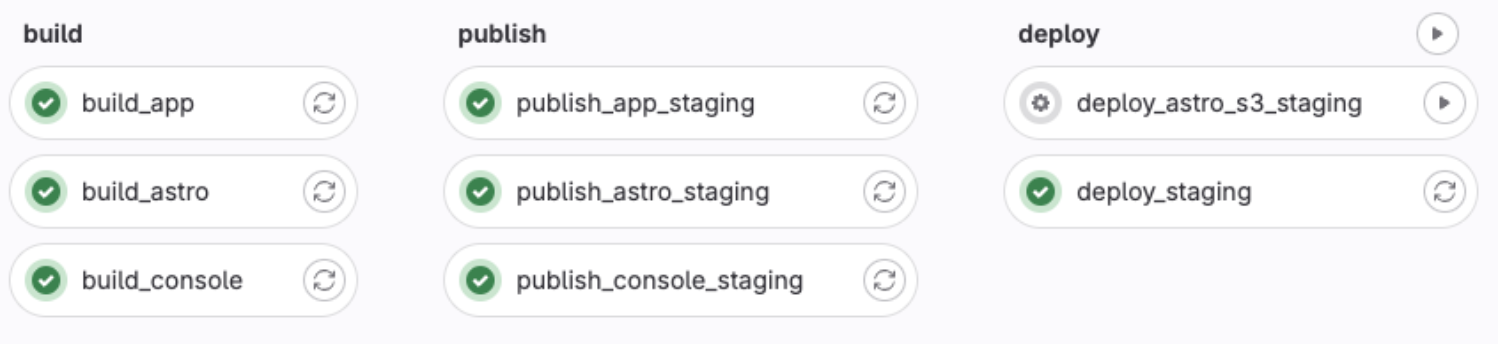

In terms of the pipeline, it's pretty similar to most of our other Craft projects, but separating out the Astro deploy makes it a lot more complex. Gitlab has some weird idiosyncrasies when it comes to triggering pipelines through its API: I could not get it to trigger only the branch pipeline, and kept getting both a merge request pipeline and a branch pipeline.

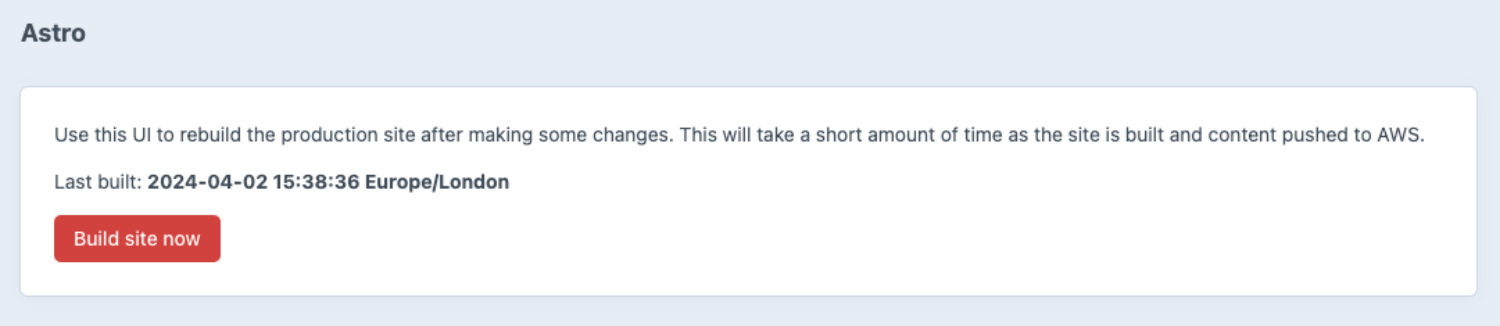

The deploy_astro_s3_staging step is fully manual, just in case we push something that requires content to be changed in Craft first. At worst it means we need to click another button to finish the deploy, but at best it means we don't get broken builds with missing content. I have also built a simple controller in Craft that lets you push a build directly from the UI. This triggers an almost identical pipeline with just this one step, which pulls the latest content from Craft and pushes it to S3 as an Astro build.

I'm pleased with this approach because we log the last build attempt, which would in theory allow me to also show what has changed since then. I could also potentially pull the task status from the Gitlab API and show it here so we could see if it was in progress or finished. I don't need this as it's going to be a fairly short-lived site, but I will almost definitely reuse this approach in future.
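Showing the build status in the control panel would mostly be a matter of reading the pipeline's `status` field. GitLab returns a pipeline object (with `id` and `status`) when a pipeline is triggered, and the same shape from `GET /api/v4/projects/:id/pipelines/:pipeline_id`. A minimal sketch of turning that JSON into a label, assuming the payload has already been fetched; `describePipeline` and the grouping of statuses are hypothetical choices, not something the controller below does.

```php
<?php
// Hypothetical helper for the Craft control panel: summarise a GitLab pipeline
// JSON payload, such as the one returned when a pipeline is triggered or by
// GET /api/v4/projects/:id/pipelines/:pipeline_id.

const IN_PROGRESS_STATUSES = ['created', 'waiting_for_resource', 'preparing', 'pending', 'running', 'scheduled'];
const FINISHED_STATUSES = ['success', 'failed', 'canceled', 'skipped'];

function describePipeline(string $json): string
{
    $pipeline = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    if (!is_array($pipeline)) {
        return 'Unexpected response from GitLab';
    }

    $id = $pipeline['id'] ?? '?';
    $status = $pipeline['status'] ?? 'unknown';

    if (in_array($status, IN_PROGRESS_STATUSES, true)) {
        return "Build #{$id} is in progress ({$status})";
    }
    if (in_array($status, FINISHED_STATUSES, true)) {
        return "Build #{$id} has finished ({$status})";
    }
    if ($status === 'manual') {
        // A pipeline blocked on a manual job reports this status
        return "Build #{$id} is waiting for a manual job";
    }

    return "Build #{$id} has an unrecognised status ({$status})";
}

echo describePipeline('{"id": 1234, "status": "running"}'), PHP_EOL;
```

Polling this every few seconds from the control panel would be enough for a short-lived site; the pipeline ID would need to be stored alongside each logged build event.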

```php
<?php

namespace modules\controllers;

use Craft;
use craft\web\Controller;
use craft\helpers\App;
use craft\elements\Entry;
use GuzzleHttp\Client as Guzzle;
use GuzzleHttp\Exception\GuzzleException;

class AstroController extends Controller
{
    public function beforeAction($action): bool
    {
        // Only allow these actions from the control panel
        $this->requireCpRequest();

        return parent::beforeAction($action);
    }

    public function actionBuild()
    {
        $project = App::env('GITLAB_PROJECT_ID');
        $token = App::env('GITLAB_TRIGGER_TOKEN');
        $ref = App::env('GITLAB_TRIGGER_REF');

        if (!$token || !$project || !$ref) {
            $this->setFailFlash('Astro building is not configured to run in this environment');
            return;
        }

        $client = new Guzzle(['base_uri' => "https://gitlab.host"]);

        try {
            // Start a pipeline on $ref using the project's pipeline trigger token
            $response = $client->request('POST', "/api/v4/projects/{$project}/trigger/pipeline", [
                'form_params' => [
                    'token' => $token,
                    'ref' => $ref,
                ],
            ]);
        } catch (GuzzleException $e) {
            // Covers 4xx/5xx responses as well as connection failures
            $this->setFailFlash($e->getMessage());
            return;
        }

        // Log the attempt so we know when content was last pushed to S3
        Craft::$app
            ->db
            ->createCommand()
            ->insert('astro_build_events', ['date' => \date('Y-m-d H:i:s', \time())])
            ->execute();

        $this->setSuccessFlash('Build was triggered. Please wait whilst it is completed');
    }
}
```
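Because every build attempt is logged in `astro_build_events`, showing what has changed since the last build becomes a date comparison. A minimal sketch, assuming entries are plain arrays with hypothetical `title` and `dateUpdated` keys; in Craft itself this would more likely be an element query filtered on `dateUpdated`, and `changedSince` is an illustrative name only.

```php
<?php
// Hypothetical sketch: given the timestamp of the last logged build and a list
// of entries (plain arrays standing in for Craft entries), return the titles of
// entries updated after that build.

function changedSince(array $entries, DateTimeInterface $lastBuild): array
{
    $changed = array_filter(
        $entries,
        fn (array $entry) => new DateTimeImmutable($entry['dateUpdated']) > $lastBuild
    );

    // Reindex so the result is a plain list of titles
    return array_values(array_map(fn (array $entry) => $entry['title'], $changed));
}

$lastBuild = new DateTimeImmutable('2024-02-01 12:00:00');
$entries = [
    ['title' => 'Home', 'dateUpdated' => '2024-01-30 09:15:00'],
    ['title' => 'About', 'dateUpdated' => '2024-02-02 16:40:00'],
    ['title' => 'News', 'dateUpdated' => '2024-02-01 12:00:01'],
];

print_r(changedSince($entries, $lastBuild));
```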

This is our first time using API-triggered pipelines in Gitlab, and they did add some complexity. The main source of it was that I didn't want to run the whole normal deploy pipeline every time we publish content, as that's redundant and time-consuming. I only wanted to trigger the Astro build workflow, which meant I needed to be able to tell the two apart in the YAML (I have made YAML do horrible things this week).
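GitLab evaluates a job's `rules` from top to bottom: the first rule that matches decides whether and how the job runs, `when: never` drops it, and if nothing matches the job is left out of the pipeline entirely. To sanity-check which template fires for which kind of pipeline, here is a toy PHP model of the rules templates in the YAML that follows. The `firstMatch` and `runningTemplates` helpers are hypothetical and only mimic GitLab's behaviour; they are not part of GitLab.

```php
<?php
// Toy model of GitLab's rule evaluation: rules are checked in order, the first
// match decides the outcome, `never` excludes the job, and no match at all also
// excludes it. The conditions mirror the .gitlab-ci.yml templates.

function firstMatch(array $rules, string $source, string $ref): ?string
{
    foreach ($rules as [$matches, $when]) {
        if ($matches($source, $ref)) {
            return $when === 'never' ? null : $when;
        }
    }
    return null; // no rule matched: the job is not added
}

$onlyPushes = [
    [fn ($s, $r) => $s === 'merge_request_event', 'never'],
    [fn ($s, $r) => $s === 'trigger', 'never'],
];

$templates = [
    '.any_push' => array_merge($onlyPushes, [
        [fn ($s, $r) => $r === 'main', 'on_success'],
        [fn ($s, $r) => $r === 'develop', 'on_success'],
    ]),
    '.only_develop_pushes' => array_merge($onlyPushes, [
        [fn ($s, $r) => $r === 'develop', 'on_success'],
    ]),
    '.only_develop_manual' => array_merge($onlyPushes, [
        [fn ($s, $r) => $r === 'develop', 'manual'],
    ]),
    '.only_develop_triggers' => [
        [fn ($s, $r) => $r === 'develop' && $s === 'trigger', 'on_success'],
    ],
];

// Returns template => when for every template whose job would be added
function runningTemplates(array $templates, string $source, string $ref): array
{
    $result = [];
    foreach ($templates as $name => $rules) {
        $when = firstMatch($rules, $source, $ref);
        if ($when !== null) {
            $result[$name] = $when;
        }
    }
    return $result;
}

var_export(runningTemplates($templates, 'trigger', 'develop'));
```

For an API trigger on `develop` only `.only_develop_triggers` survives, while a `push` to `develop` gets `.any_push`, `.only_develop_pushes` and `.only_develop_manual` (as `manual`), and a merge request pipeline gets nothing.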

```yaml
# the types of input to Gitlab pipelines can be broken down into: any push (for image builds, which happen
# regardless of the branch), branch-specific pushes, manual (Astro) and trigger (API)
.only_pushes:
  rules:
    - if: '$CI_PIPELINE_SOURCE == "merge_request_event"'
      when: never
    - if: '$CI_PIPELINE_SOURCE == "trigger"'
      when: never

.any_push:
  rules:
    - !reference [.only_pushes, rules]
    - if: '$CI_COMMIT_REF_NAME == "main"'
    - if: '$CI_COMMIT_REF_NAME == "develop"'

.only_develop_pushes:
  rules:
    - !reference [.only_pushes, rules]
    - if: '$CI_COMMIT_REF_NAME == "develop"'

.only_develop_manual:
  rules:
    - !reference [.only_pushes, rules]
    - if: '$CI_COMMIT_REF_NAME == "develop"'
      when: manual

.only_develop_triggers:
  rules:
    - if: '$CI_COMMIT_REF_NAME == "develop" && $CI_PIPELINE_SOURCE == "trigger"'

# and the Astro scripts are shared across two workflows (manual and trigger) so I abstracted them
.astro_deploy_script_staging:
  script:
    - cd /app
    - export AWS_ACCESS_KEY_ID="$BUFFALO_S3_KEY"
    - export AWS_SECRET_ACCESS_KEY="$BUFFALO_S3_SECRET"
    - export GRAPHQL_URL="$STAGING_GRAPHQL_URL"
    - export GRAPHQL_TOKEN="$STAGING_GRAPHQL_TOKEN"
    - yarn graphql:codegen
    - yarn build
    - aws s3 sync --delete ./dist/ s3://bucket
    - aws cloudfront create-invalidation --distribution-id $STAGING_CLOUDFRONT_DISTRIBUTION_ID --paths "/*"

# build step using .any_push
build_astro:
  stage: build
  extends: .any_push
  script:
    - docker build -f ./Dockerfile.astro -t $ASTRO_IMAGE .
    - docker push $ASTRO_IMAGE

# staging publish using .only_develop_pushes (deploy also uses this)
publish_astro_staging:
  stage: publish
  extends: .only_develop_pushes
  needs:
    - build_astro
  script:
    - docker pull $ASTRO_IMAGE
    - docker tag $ASTRO_IMAGE $ASTRO_IMAGE_STAGING
    - docker push $ASTRO_IMAGE_STAGING

# and Astro deploys using trigger and manual
deploy_astro_s3_staging:
  stage: deploy
  image: ${ASTRO_IMAGE_STAGING}
  extends:
    - .only_develop_manual
    - .astro_deploy_script_staging
  needs:
    - publish_astro_staging
    - deploy_staging

trigger_deploy_astro_s3_staging:
  stage: deploy
  image: ${ASTRO_IMAGE_STAGING}
  extends:
    - .only_develop_triggers
    - .astro_deploy_script_staging
```

And that's it really. As usual, writing this up makes it seem far simpler than it was, but so much of it required me to learn something brand new that I did everything in the least efficient order possible. For future reference, the most efficient order is:

- set up domain in Route 53

- set up certificate (in us-east-1, as CloudFront only uses ACM certificates from that region)

- set up bucket

- set up CloudFront

- add the DNS record in Route 53

- set up IAM users to write to the bucket and push CloudFront invalidations

- set up Lightsail to run it all
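For the IAM step, the Gitlab deploy user only needs permission for what the CI script actually does: list the bucket and put/delete objects for `aws s3 sync --delete`, plus `cloudfront:CreateInvalidation`. A minimal sketch that generates such a least-privilege policy document, assuming placeholder values for the bucket, account ID and distribution ID (`deployPolicy` is a hypothetical helper). The Craft user would need its own policy for uploads, and this one may need widening if your sync sets ACLs or other extras.

```php
<?php
// Hypothetical sketch: build a least-privilege IAM policy for the CI user that
// runs `aws s3 sync --delete` and `aws cloudfront create-invalidation`.
// Bucket name, account ID and distribution ID are placeholders.

function deployPolicy(string $bucket, string $accountId, string $distributionId): string
{
    $policy = [
        'Version' => '2012-10-17',
        'Statement' => [
            [
                // sync lists the bucket to work out what to upload or delete
                'Effect' => 'Allow',
                'Action' => ['s3:ListBucket'],
                'Resource' => "arn:aws:s3:::{$bucket}",
            ],
            [
                // object-level actions apply to keys inside the bucket
                'Effect' => 'Allow',
                'Action' => ['s3:PutObject', 's3:DeleteObject'],
                'Resource' => "arn:aws:s3:::{$bucket}/*",
            ],
            [
                // CloudFront ARNs have no region component
                'Effect' => 'Allow',
                'Action' => ['cloudfront:CreateInvalidation'],
                'Resource' => "arn:aws:cloudfront::{$accountId}:distribution/{$distributionId}",
            ],
        ],
    ];

    return json_encode($policy, JSON_PRETTY_PRINT | JSON_UNESCAPED_SLASHES);
}

echo deployPolicy('bucket', '123456789012', 'EXAMPLEID'), PHP_EOL;
```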

The only notable things missing here are previews and future-scheduled posts. Losing previews is a small price to pay, in my opinion, though I do understand some people simply can't live without them; they would be impossible in this setup, as S3 only serves the static Astro build. If I had to do scheduled posts with this (I still might!), I think I'd end up using Cloudflare Durable Object alarms. The basic theory is that if an article's publish date is in the future, we would set an alarm for that date, which in turn would trigger a build in Gitlab CI. That build would pull in the article simply because its publish date is now in the past. That's an awful lot of infrastructure just for this, but it's the only way I can think of to make it work without someone triggering the build manually.
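Whatever ends up holding the alarm, something first has to work out when the next build is due: the earliest publish date that is still in the future. A minimal sketch of that calculation in PHP, assuming post dates arrive as date strings; `nextBuildAt` is a hypothetical helper, and the Durable Object side is not shown.

```php
<?php
// Hypothetical sketch: find the earliest post date that is still in the future,
// i.e. the moment an alarm would need to fire to trigger the next build.
// Returns null when nothing is scheduled.

function nextBuildAt(array $postDates, DateTimeImmutable $now): ?DateTimeImmutable
{
    $next = null;
    foreach ($postDates as $date) {
        $candidate = new DateTimeImmutable($date);
        // Only future dates count; keep the earliest of them
        if ($candidate > $now && ($next === null || $candidate < $next)) {
            $next = $candidate;
        }
    }
    return $next;
}

$now = new DateTimeImmutable('2024-02-04 10:00:00');
$next = nextBuildAt(['2024-02-01 08:00', '2024-02-10 09:00', '2024-02-06 18:30'], $now);
echo $next ? $next->format('Y-m-d H:i') : 'nothing scheduled', PHP_EOL;
```

Re-running this after every save (and after every scheduled build) would keep exactly one pending alarm, rather than one per article.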

In all, I really like this way of working, and I'm considering something similar for this site, but I don't think it'll work with the number of posts I have: builds would take ages. Astro doesn't support delta builds, and rolling my own by figuring out what's changed would likely be as much effort as just letting the full build run anyway.
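Detecting which entries changed is the easy half of a delta build. A minimal sketch, assuming a hypothetical store of per-page content hashes from the previous build (`diffContent` is an illustrative name, not an Astro or Craft API):

```php
<?php
// Hypothetical sketch of change detection between builds. $previousHashes maps
// page URI => SHA-1 of that page's content at the last build; $currentContent
// maps page URI => the content as it is now.

function diffContent(array $previousHashes, array $currentContent): array
{
    $currentHashes = array_map('sha1', $currentContent);

    // Pages present in both snapshots whose hash differs
    $changed = array_filter(
        array_intersect_key($currentHashes, $previousHashes),
        fn ($hash, $uri) => $previousHashes[$uri] !== $hash,
        ARRAY_FILTER_USE_BOTH
    );

    return [
        'added' => array_keys(array_diff_key($currentHashes, $previousHashes)),
        'removed' => array_keys(array_diff_key($previousHashes, $currentHashes)),
        'changed' => array_keys($changed),
    ];
}

$previous = ['home' => sha1('Welcome'), 'about' => sha1('Old about'), 'old-post' => sha1('Gone')];
$current = ['home' => 'Welcome', 'about' => 'New about', 'new-post' => 'Fresh'];
print_r(diffContent($previous, $current));
```

This only finds the entries themselves. Listing pages, tag pages and anything else that renders those entries would also need rebuilding, and tracking those dependencies is likely where most of the effort would go.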

Weston Super Maim - See You Tomorrow Baby